Operational Excellence

In the near future, we plan to complement our measurements with an additional assessment of service availability. For the Netherlands, we present this crowdsourced approach as a case study this year; it will become part of the overall scoring next year.

An additional important aspect of mobile service quality – beyond performance and measured values – is the actual availability of the mobile networks. Obviously, even the best-performing network is of only limited benefit to its users if it is frequently impaired by outages or disruptions.

Therefore, P3 has been looking into additional methods for the quantitative determination of network availability, collecting data via crowdsourcing. This method must not, however, be confused with the drivetests described on the previous pages. We are convinced that crowdsourcing will further enhance our benchmarks. Drivetesting has the advantage of a very controlled environment, while crowdsourcing enables statements about network availability on a larger scale in terms of time and geography.

When it comes to diagnosing the sheer availability of the respective mobile networks, a crowdsourcing approach can provide additional insights. Therefore, P3 has developed an app-based crowdsourcing mechanism in order to assess how a large number of mobile customers experience the availability of their mobile network. We call this aspect “operational excellence”.

In the future, we envision this assessment becoming part of the overall scoring of our mobile network tests. But as we have been applying this method in the Netherlands for only a couple of months and have not yet reached statistically robust numbers of users for all tested networks within the months considered, we have decided to present the results as a case study this year.

The resulting observations are therefore not yet included in the score of our network test. Nonetheless, in next year's P3 connect Mobile Benchmark in the Netherlands, we expect our crowdsourcing results to become a part of the overall test score. The P3 connect Mobile Benchmark will then be the only mobile network test which combines drivetesting and crowdsourcing, thus delivering the most comprehensive view of network performance.

In fact, we plan to extend the scope of our crowdsourcing results even further. In addition to reliability, coverage reach and quality as well as users' best throughput (average and top values) will become part of the crowd score.

OPERATIONAL EXCELLENCE AT A GLANCE

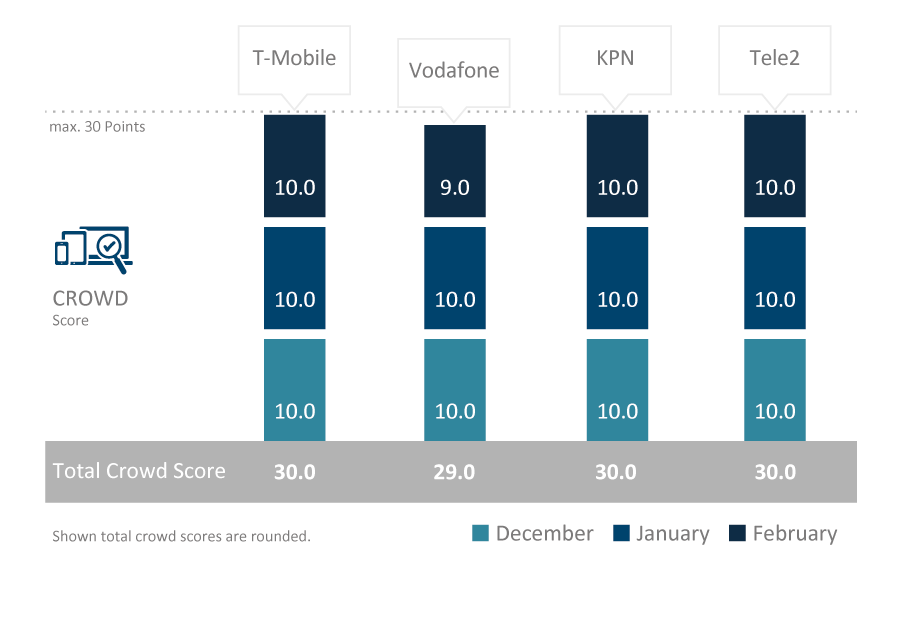

Considering December 2017, January 2018 and February 2018, we could only observe one degradation of one hour in the Vodafone network in February 2018 (see chart below). According to our planned crowdsourcing methodology and evaluation (see page 13), this would have resulted in a loss of one (rounded) point for the February score of Vodafone, while the three other candidates would have remained unaffected.

Crowdsourcing shows: Dutch networks very reliable

For this case study, we have taken a closer look at the data network availability in the Netherlands for the months preceding and including our measurement tours – specifically December 2017, January 2018 and February 2018. The underlying methodology is described in detail on the right-hand side.

An in-depth analysis of our crowdsourcing data shows that the Dutch networks are, all in all, very stable and reliable. The only degradation that we could actually observe happened on February 22nd at 13:00 in the Vodafone network. This operator suffered an observable service degradation on this day that lasted no more than one hour.

This one hour of limited availability costs Vodafone one point in the simulated crowd score for February. KPN, T-Mobile and Tele2 did not suffer any observable degradations in the period under consideration.

This minor reduction of service availability would have had only a limited impact on the overall result even if we had already included it in our scoring. Even when taking into account the conversion of maximum achievable points to a lower total in order to “make room” for the crowdsourcing points, the overall ranking would not have changed and Vodafone would have suffered a loss of only one point.

However, with the close point distances between the leading contenders that are typical of highly competitive markets with extremely high performance levels such as the Netherlands, multiple or prolonged degradations clearly would have the potential to alter the final ranking order of our benchmark. We are already excited to put this new component of our testing procedures officially into effect starting with the P3 connect Mobile Benchmark in the Netherlands next year.

CROWDSOURCING METHODOLOGY

The mechanisms of our crowdsourcing analyses carefully distinguish actual service degradations from simple losses of network coverage. Also, the planned scoring model considers large-scale network availability as well as a fine-grained measurement of operational excellence.

For the crowdsourcing of operational excellence, P3 considers connectivity reports that are gathered by background diagnosis processes included in a number of popular smartphone apps. While the customer uses one of these apps, a diagnosis report is generated daily and is evaluated per hour. As such reports only contain information about the current network availability, they generate just a small number of bytes per message and do not include any personal user data.

Additionally, interested parties can deliberately take part in the data gathering with the specific “U get” app (see below).

In order to differentiate network glitches from normal variations in network coverage, we apply a precise definition of “service degradation”: A degradation is an event where data connectivity is impacted by a number of cases that significantly exceeds the expectation level. To judge whether an hour of interest is an hour with degraded service, the algorithm looks at a sliding window of 168 hours before the hour of interest. This ensures that we only consider actual network service degradations, differentiating them from a simple loss of network coverage of the respective smartphone due to prolonged indoor stays or similar reasons.
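
As an illustration only, the sliding-window idea described above could be sketched in Python as follows. Only the window length of 168 hours comes from the text. The function name, the input format (one count of impacted connectivity cases per hour) and the threshold rule (window mean plus a multiple `k` of the standard deviation, with a floor of one case on the spread) are assumptions made for this sketch and are not P3's published algorithm.

```python
from statistics import mean, pstdev

# From the text: the algorithm looks at the 168 hours before the hour of interest.
WINDOW_HOURS = 168


def is_degraded(failures_per_hour, hour_index, k=3.0):
    """Return True if the hour at hour_index looks degraded (hypothetical rule).

    failures_per_hour: list of impacted-connectivity case counts, one per hour.
    k: assumed multiplier defining "significantly exceeds the expectation level".
    """
    if hour_index < WINDOW_HOURS:
        # Not enough history to form an expectation level.
        return False
    window = failures_per_hour[hour_index - WINDOW_HOURS:hour_index]
    expectation = mean(window)
    # Floor the spread at 1.0 so a perfectly flat history does not flag tiny changes.
    spread = max(pstdev(window), 1.0)
    return failures_per_hour[hour_index] > expectation + k * spread


# Example: a quiet week followed by one normal hour and one spike.
series = [2] * WINDOW_HOURS + [3, 40]
print(is_degraded(series, 168))  # normal hour
print(is_degraded(series, 169))  # spike
```

Comparing each hour against its own preceding week means that a device which simply has poor coverage for a long time raises the expectation level rather than triggering a degradation.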

In order to ensure the statistical relevance of this approach, a valid assessment month must fulfil clearly designated prerequisites: A valid assessment hour consists of a predefined number of samples per hour and per operator. The exact number depends on factors like market size and number of operators.

A valid assessment month must consist of at least 90 percent valid assessment hours (again per month and per operator). As these requirements were only partly met for the period of this report, we publish the Dutch crowdsourcing as a case study.
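
The validity rule can be expressed as a short Python sketch. The 90 percent share is taken from the text. The required number of samples per hour is not published here, so `min_samples_per_hour` is a placeholder parameter, and reading "a predefined number of samples" as "at least that many" is an assumption of this sketch.

```python
def is_valid_month(samples_per_hour, min_samples_per_hour, min_valid_share=0.9):
    """Check whether one operator's month qualifies as a valid assessment month.

    samples_per_hour: list with the number of samples received in each hour
        of the month for one operator.
    min_samples_per_hour: placeholder for the predefined sample count, which
        depends on market size and number of operators (value not given).
    min_valid_share: from the text, at least 90 percent of hours must be valid.
    """
    if not samples_per_hour:
        return False
    valid_hours = sum(1 for n in samples_per_hour if n >= min_samples_per_hour)
    return valid_hours / len(samples_per_hour) >= min_valid_share


# Example with a 30-day month (720 hours) and a hypothetical minimum of 50 samples.
month = [60] * 650 + [10] * 70
print(is_valid_month(month, min_samples_per_hour=50))
```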

Sophisticated scoring model

The relevant KPIs are then based on the number of days when degradations occurred as well as the total count of hours affected by service degradations. In the scoring model that we plan to apply to the gathered crowdsourcing data, 60 per cent of the available points will consider the number of days affected by service degradations – thus representing the larger-scale network availability. An additional 40 per cent of the total score is derived from the total count of hours affected by degradations, thus representing a finer-grained measurement of operational excellence.

Each considered month is then represented by a maximum of ten achievable points. The maximum of six points (60 per cent) for the number of affected days is diminished by one point for each day affected by a service degradation. One affected day will cost one point and so on until six affected days out of a month will reduce this part of a score to zero.

The remaining four points are awarded based on the total number of hours affected by degradations. Here, we apply increments of six hours: Six hours with degradations will cost one point, twelve hours will cost two points etc., until a total number of 24 affected hours will lead to zero points in this part of the score.
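
The scoring rules above can be summarised in a small Python sketch. The six and four point maxima, the one point per affected day and the one point per six affected hours come from the text. How hours between the six-hour increments are treated is not spelled out, so this sketch assumes a proportional deduction with the monthly total rounded half-up to a whole point, which is consistent with the "one (rounded) point" lost by Vodafone in February. The function name is hypothetical.

```python
import math


def monthly_crowd_score(affected_days, affected_hours):
    """Return the simulated crowd score (0 to 10) for one operator and month."""
    # Up to six points: one point lost per day affected by a degradation.
    day_points = max(0, 6 - affected_days)
    # Up to four points: one point lost per six affected hours
    # (assumption: proportional deduction between the increments).
    hour_points = max(0.0, 4 - affected_hours / 6)
    # Assumption: the total is rounded half-up to a whole point.
    return math.floor(day_points + hour_points + 0.5)


# The February case from this report: one affected day with one affected hour.
print(monthly_crowd_score(affected_days=1, affected_hours=1))  # 9
# A month without any observed degradation keeps the full ten points.
print(monthly_crowd_score(affected_days=0, affected_hours=0))  # 10
```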

Participate in our crowdsourcing

Anyone interested in being a part of our global “operational excellence” panel and obtaining insights into the reliability of the mobile network that her or his smartphone is logged into can most easily participate by installing and using the “U get” app. This app concentrates exclusively on network analyses and is available at uget-app.com. “U get” checks and visualises the current mobile network performance and contributes the results to our crowdsourcing platform. Join the global community of users who understand their personal wireless performance, while contributing to the world’s most comprehensive picture of the mobile customer experience.