TESTING METHODOLOGY

The methodology of the umlaut connect Mobile Benchmark is the result of more than 15 years of testing mobile networks. Today, network tests are conducted in more than 80 countries. Our methodology was carefully designed to evaluate and objectively compare the performance and service quality of mobile networks from the users’ perspective.

The umlaut connect Mobile Benchmark Spain comprises the results of extensive voice and data drivetests and walktests as well as a sophisticated crowdsourcing approach.

DRIVETESTS AND WALKTESTS

The drivetests and walktests in Spain took place in October 2020.

All samples were collected during the day, between 8.00 a.m. and 10.00 p.m. The network tests covered inner-city areas as well as outer metropolitan and suburban areas. Measurements were also taken in smaller towns and cities along connecting highways. For the drivetests, each of the two cars was operated by two rotating teams working in shifts. The connecting routes between the cities covered about 3,800 kilometres per car, or 7,540 kilometres for both cars. In total, the two vehicles together covered about 11,540 km.

The combination of test areas has been selected to provide representative test results across the Spanish population. The areas selected for the 2020 test account for 11.8 million people, or roughly 25.4 per cent of the total population of Spain. The test routes and all visited cities and towns are shown in the “Results” section of this report.

The two drivetest cars were equipped with arrays of Samsung Galaxy S10 and S20+ smartphones for the simultaneous measurement of voice and data services.

VOICE TESTING

One Galaxy S10 per operator in each car was used for the voice tests, setting up test calls from one car to the other. The walktest team also carried one Galaxy S10 per operator for the voice tests; in this case, the smartphones called another Galaxy S10 serving as a stationary counterpart. The audio quality of the transmitted speech samples was evaluated using the HD-voice-capable, ITU-standardised POLQA wideband algorithm. All smartphones used for the voice tests were set to VoLTE preferred mode.

In the assessment of call setup times, we also rated the so-called P90 value. This value specifies the threshold in a statistical distribution below which 90 per cent of the gathered values lie. For speech quality, we published the P10 value (10 per cent of the values are lower than the specified threshold), because in this case higher values are better.
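As an illustration of the P90 and P10 values, here is a minimal sketch that computes them with the nearest-rank percentile method. The report does not say which percentile definition umlaut applies, so nearest-rank is an assumption, and the function name and sample values are hypothetical.

```python
import math


def nearest_rank_percentile(values, p):
    """Return the p-th percentile (0 < p <= 100) using the nearest-rank method.

    Assumption: nearest-rank is one common definition; the report does not
    state which definition is used for its P90/P10 values.
    """
    if not values:
        raise ValueError("need at least one value")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


# Hypothetical call setup times in seconds (lower is better): report P90.
setup_times = [1.1, 1.2, 1.3, 1.3, 1.4, 1.5, 1.6, 1.8, 2.0, 3.5]
# Hypothetical POLQA scores (higher is better): report P10.
polqa_scores = [3.2, 3.6, 3.8, 3.9, 4.0, 4.0, 4.1, 4.1, 4.2, 4.3]

print(nearest_rank_percentile(setup_times, 90))   # 2.0
print(nearest_rank_percentile(polqa_scores, 10))  # 3.2
```

For setup times, P90 captures the slow tail while ignoring a single outlier such as the 3.5 s call; for speech quality, P10 captures the poor-quality tail instead.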

To account for typical smartphone usage scenarios during the voice tests, background data traffic was generated in a controlled way by injecting 100 KB of data traffic (HTTP downloads). We also evaluated the so-called Multirab (Multi Radio Access Bearer) Connectivity, which indicates whether data connectivity is available during phone calls. The Voice scores account for 32 per cent of the total results.

DATA TESTING

Data performance was measured using four more smartphones in each car, one per operator. In Car 1, this was another Galaxy S10, set to 4G preferred mode. Car 2 and the walktest team carried one Galaxy S20+ per operator, set to 5G preferred mode, enabling 5G connectivity wherever available.

For the web tests, the smartphones accessed web pages selected according to the widely recognised Alexa ranking.

In addition, the static “Kepler” test web page as specified by ETSI (European Telecommunications Standards Institute) was used. To test the data service performance, files of 5 MB (download) and 2.5 MB (upload) were transferred from or to a test server located in the cloud. Furthermore, the peak data performance was tested in the uplink and downlink directions by assessing the amount of data transferred within a seven-second period.
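Both kinds of transfer test reduce to the same arithmetic: bits moved divided by elapsed time. The sketch below shows this for a fixed-size file transfer and for the fixed seven-second peak window. It assumes decimal units (1 MB = 1,000,000 bytes, 1 Mbit/s = 1,000,000 bit/s); the function name and the measurement values are hypothetical.

```python
def throughput_mbps(bytes_transferred, seconds):
    """Average data rate in Mbit/s for one transfer.

    Assumption: decimal units, i.e. 1 MB = 1,000,000 bytes and
    1 Mbit/s = 1,000,000 bit/s.
    """
    if seconds <= 0:
        raise ValueError("duration must be positive")
    return bytes_transferred * 8 / seconds / 1_000_000


PEAK_WINDOW_S = 7.0  # fixed measurement window used for the peak test

# Hypothetical measurements:
print(throughput_mbps(5_000_000, 2.5))             # 5 MB download in 2.5 s -> 16.0
print(throughput_mbps(2_500_000, 4.0))             # 2.5 MB upload in 4 s -> 5.0
print(throughput_mbps(87_500_000, PEAK_WINDOW_S))  # 87.5 MB within 7 s -> 100.0
```

The difference between the two tests is what is held fixed: the file test fixes the amount of data and measures the time, while the peak test fixes the time and measures the amount of data.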

The evaluation of YouTube playback takes into account that YouTube dynamically adapts the video resolution to the available bandwidth. Therefore, in addition to success ratios and start times, the measurements also determined the average video resolution.
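Because the resolution changes during playback, an "average resolution" has to weight each resolution somehow. The report does not specify the averaging method; the sketch below assumes a playback-time-weighted mean of the vertical resolution (lines), with a hypothetical function name and segment data.

```python
def average_resolution(segments):
    """Time-weighted mean vertical resolution over playback segments.

    segments: list of (duration_seconds, vertical_lines) pairs, e.g. (10.0, 720).
    Assumption: weighting by playback time is one plausible choice; the
    report does not state how the average is computed.
    """
    total_time = sum(duration for duration, _ in segments)
    if total_time <= 0:
        raise ValueError("no playback time")
    return sum(duration * lines for duration, lines in segments) / total_time


# Hypothetical playback: 5 s at 480p, then 15 s at 1080p after adaptation.
print(average_resolution([(5.0, 480), (15.0, 1080)]))  # 930.0
```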

All the tests were conducted with the best-performing mobile plan available from each operator. Data scores account for 48 per cent of the total results.

CROWDSOURCING

Additionally, umlaut conducted crowd-based analyses of the Spanish networks, which contribute 20 per cent to the end result. They are based on data gathered between mid-May and the end of October 2020.
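The three category weights (Voice 32 per cent, Data 48 per cent, Crowdsourcing 20 per cent) sum to 100 per cent. A minimal sketch of combining them, assuming each category result is expressed as a fulfilment fraction between 0 and 1 and that the combination is a simple weighted sum; the fulfilment values below are hypothetical.

```python
# Category weights as stated in the report.
CATEGORY_WEIGHTS = {"voice": 0.32, "data": 0.48, "crowdsourcing": 0.20}


def weighted_total(fulfilment):
    """Combine per-category fulfilment values (0.0 to 1.0) into one overall fraction.

    Assumption: a plain weighted sum of the category fulfilment values.
    """
    missing = CATEGORY_WEIGHTS.keys() - fulfilment.keys()
    if missing:
        raise KeyError(f"missing categories: {sorted(missing)}")
    return sum(CATEGORY_WEIGHTS[c] * fulfilment[c] for c in CATEGORY_WEIGHTS)


# Hypothetical fulfilment values for a single operator.
print(round(weighted_total({"voice": 0.90, "data": 0.80, "crowdsourcing": 0.85}), 3))  # 0.842
```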

For the collection of crowd data, umlaut has integrated a background diagnosis process into more than 800 diverse Android apps. If one of these applications is installed on the end user’s phone and the user authorizes the background analysis, data collection takes place 24/7, 365 days a year. Reports are generated every hour and sent daily to umlaut’s cloud servers. Such reports occupy just a small number of bytes per message and do not include any personal user data. This unique crowdsourcing technology allows umlaut to collect data about real-world experience wherever and whenever customers use their smartphones.

QUALITY OF BROADBAND SERVICE

For the assessment of network coverage, umlaut applies a grid of 2 × 2 km tiles (so-called evaluation areas, or EAs) over the test area. For each tile, a minimum number of users and measurement values must be available. To assess Coverage Excellence, umlaut awards one point if the considered network provides 4G or 5G coverage in an EA. Another point is awarded to a candidate for each competitor that has a smaller share of broadband usage or none at all. In a country with four contenders, a candidate can thus reach up to four points per tile: one for broadband coverage and three additional ones for “beaten” competitors. The assessment then relates the obtained points to the total possible points for Coverage Excellence.
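The point scheme above can be sketched as follows. Assumptions not stated in the report: each tile is represented by each operator's share of 4G/5G samples in that tile, "smaller share" means strictly smaller, a candidate without coverage earns no points in that tile, and tiles have already passed the minimum-users check. Operator names and shares are hypothetical.

```python
def coverage_excellence(tiles, candidate):
    """Fraction of possible Coverage Excellence points earned by a candidate.

    tiles: list of dicts mapping operator -> share of that operator's samples
    in the tile that were on 4G/5G (0.0 to 1.0). Tiles are assumed to have
    already passed the minimum-users / minimum-samples check.
    """
    earned = 0
    possible = 0
    for shares in tiles:
        own = shares[candidate]
        competitors = [op for op in shares if op != candidate]
        possible += 1 + len(competitors)  # 1 coverage point + 1 per competitor
        if own > 0:
            earned += 1  # candidate provides 4G/5G in this tile
            # one point per competitor with a smaller (or zero) broadband share
            earned += sum(1 for op in competitors if shares[op] < own)
    return earned / possible if possible else 0.0


# Two hypothetical tiles with four operators A to D.
tiles = [
    {"A": 0.90, "B": 0.80, "C": 0.95, "D": 0.00},
    {"A": 0.50, "B": 0.60, "C": 0.70, "D": 0.40},
]
print(coverage_excellence(tiles, "A"))  # 0.625 (3 + 2 of 8 points)
```

With four contenders each tile is worth four points, so two tiles give a maximum of eight; operator A earns three in the first tile and two in the second.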

In addition, we consider the Time on Broadband. It reveals how often a single user had 4G or 5G reception during the observation period, independent of the EAs in which the samples were obtained. To calculate this, umlaut relates the number of samples with 4G/5G coverage to the total number of samples. Coverage Excellence and Time on Broadband each provide 50 per cent of the points for the Quality of Broadband Service. Important: the percentages determined for both parameters reflect the respective degrees of fulfilment. They do not correspond to the percentage of 4G/5G coverage of an area or population.
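Time on Broadband is a simple ratio over all samples, and the Quality of Broadband Service splits its points equally between the two parameters. A minimal sketch, assuming each sample carries a radio technology label such as "3G" or "5G"; the labels, function names and values are hypothetical.

```python
BROADBAND = {"4G", "5G"}


def time_on_broadband(samples):
    """Share of samples with 4G or 5G reception, pooled across all EAs."""
    samples = list(samples)
    if not samples:
        raise ValueError("no samples")
    return sum(1 for tech in samples if tech in BROADBAND) / len(samples)


def quality_of_broadband_service(coverage_excellence, time_on_bb):
    """Equal 50/50 weighting of the two fulfilment values, as stated in the report."""
    return 0.5 * coverage_excellence + 0.5 * time_on_bb


tob = time_on_broadband(["4G", "4G", "5G", "3G"])  # hypothetical samples
print(tob)                                         # 0.75
print(quality_of_broadband_service(0.625, tob))    # 0.6875
```

As the report notes, both results are degrees of fulfilment; a Time on Broadband of 0.75 does not mean that 75 per cent of an area is covered.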

DATA RATES AND LATENCY

Additionally, umlaut investigates the data rates and latencies that were actually available to each user. The examination of these parameters is independent of the EAs and thus concentrates on the experience of each individual user. Samples that were obtained, for instance, via Wi-Fi or while the smartphone’s flight mode was active are filtered from the data pool before further analysis.

To take into account that many mobile phone tariffs limit data rates, umlaut has defined speed classes that correspond to particular applications: for Basic Internet, 2 Mbps are sufficient; HD Video requires 5 Mbps; and for UHD Video the minimum is 20 Mbps.

For a sample to count as valid, a minimum amount of data must have been transmitted within a 15-minute period. The same principle also applies to the assignment of a data packet’s latency to the corresponding application-based classes: round-trip times of up to 100 ms are sufficient for OTT Voice, while 50 ms or faster qualifies a sample for Gaming.

In the assessment, umlaut assigns the data rate and latency observed in a sample to one of these performance classes. Basic Internet then accounts for 60 per cent of the Data Rate score, HD Video for 30 per cent and UHD Video for 10 per cent (see table on the right-hand side). The Latency score incorporates OTT Voice with a share of 80 per cent and Gaming with a share of 20 per cent.
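The thresholds and weights above can be combined into the two scores roughly as follows. This is a sketch under stated assumptions, not umlaut's published formula: it assumes a sample reaching a higher class also counts for the lower ones, that each class contributes the share of valid samples reaching it, and that the 15-minute validity filter has already been applied (the minimum data amount is not given in the report). Function names and sample values are hypothetical.

```python
# Thresholds and weights as stated in the report.
SPEED_CLASSES = [  # (class, minimum data rate in Mbit/s, weight in Data Rate score)
    ("Basic Internet", 2.0, 0.6),
    ("HD Video", 5.0, 0.3),
    ("UHD Video", 20.0, 0.1),
]
LATENCY_CLASSES = [  # (class, maximum round-trip time in ms, weight in Latency score)
    ("OTT Voice", 100.0, 0.8),
    ("Gaming", 50.0, 0.2),
]


def data_rate_score(rates_mbps):
    """Weighted share of valid samples reaching each speed class (0.0 to 1.0).

    Assumption: classes are cumulative, so a UHD-capable sample also counts
    for HD Video and Basic Internet.
    """
    if not rates_mbps:
        raise ValueError("no valid samples")
    n = len(rates_mbps)
    return sum(weight * sum(1 for r in rates_mbps if r >= minimum) / n
               for _, minimum, weight in SPEED_CLASSES)


def latency_score(rtts_ms):
    """Weighted share of valid samples meeting each latency class (0.0 to 1.0)."""
    if not rtts_ms:
        raise ValueError("no valid samples")
    n = len(rtts_ms)
    return sum(weight * sum(1 for t in rtts_ms if t <= maximum) / n
               for _, maximum, weight in LATENCY_CLASSES)


# Hypothetical samples that already passed the 15-minute validity check.
print(round(data_rate_score([1.5, 3.0, 8.0, 25.0]), 3))    # 0.625
print(round(latency_score([30.0, 70.0, 120.0, 45.0]), 3))  # 0.7
```

Weighting Basic Internet most heavily means that reliably reaching 2 Mbps matters more to the score than occasional very high data rates.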

umlaut’s fleet of test cars is equipped with up-to-date test smartphones. The phones on board are operated and supervised by a unique control system.

One Samsung Galaxy S10 per operator was used for the voice measurements and another Galaxy S10 for half of the data measurements. In the second car and in the walktest team’s backpack, a Galaxy S20+ was used and set to “5G preferred”.

The umlaut staff analysed hundreds of thousands of measurement values during and after the tests.

CONCLUSION

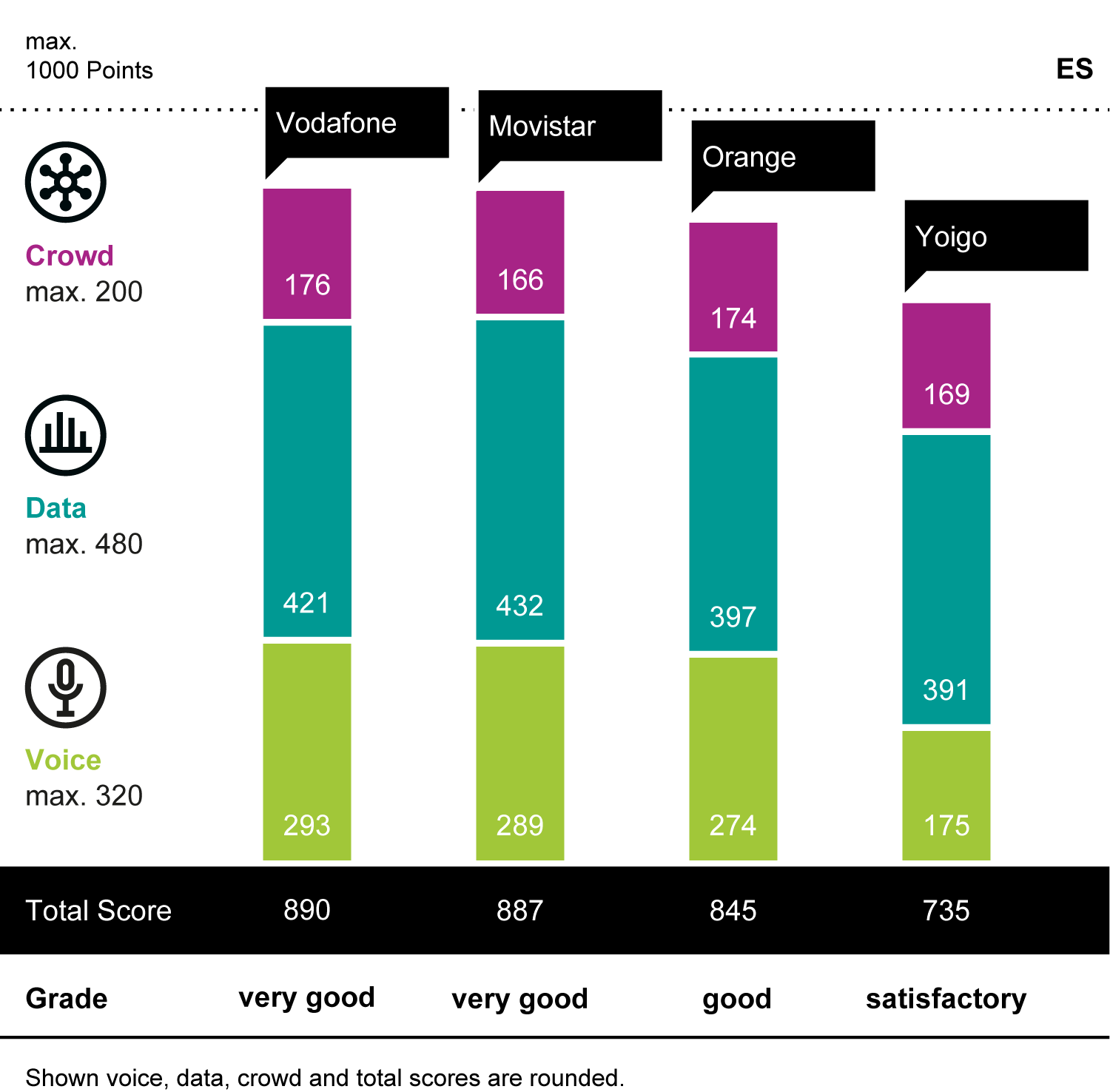

Vodafone wins for the sixth time in a row. Movistar fights its way up to second place, only three points behind the winner, with an impressive score improvement over the previous year. Orange ranks third with a good result, and Yoigo fourth with the grade “satisfactory”.

The overall winner of the umlaut connect Mobile Benchmark Spain is Vodafone, for the sixth time in a row. Movistar, however, comes very close, only three points behind. It gains second place through a massive score improvement of 63 points over last year’s result, a large part of it due to improvements in the Voice category made possible by the introduction of VoLTE. Movistar also takes the lead in the Data category. In contrast, the other three contenders could not maintain their score levels from the previous year and lost points.

Orange falls back from second place in 2019 to third and achieves the grade “good” (2019: “very good”). It does, however, rank second in the Crowdsourcing category, with strong crowdsourced user download speeds and comparatively short latencies.

Yoigo ranks fourth, falling back from 2019’s “good” to the grade “satisfactory” this year. This is mainly due to some shortcomings in the Voice category. In the Data category, Yoigo scores closely behind Orange, and in the Crowdsourcing category it even outperforms the overall second-ranking Movistar, achieving the fastest crowdsourced user download speeds together with Orange.

Most importantly this year, all four Spanish operators manage to provide mostly stable connections to their users, even in today’s particularly demanding times.

For the sixth time in a row, Vodafone is the winner of our Mobile Benchmark in Spain.

The operator takes the win by leading the Voice and Crowdsourcing categories and probably also by offering the largest 5G footprint in Spain. All of this results in an appealing combination for customers.

Movistar has fought its way up to second place, only three points behind the winner, with an impressive score improvement of 63 points over last year’s result. Movistar also takes the lead in the Data category and achieves the best scores in the drivetests performed in cities, towns and on the roads.

Orange ranks third with an overall good result. While the operator scores in the midfield in our drivetests and walktests, it shows particularly strong results in the Crowdsourcing category, especially due to the highest crowdsourced user download speeds in our comparison and comparatively good latencies.

Yoigo, Spain’s smallest operator, ranks fourth with the overall grade “satisfactory”. Compared with last year’s results, Yoigo lost some points. Still, the operator scores closely behind the third-ranking Orange in the Data category and even outperforms the overall second-ranking Movistar in the Crowdsourcing category.